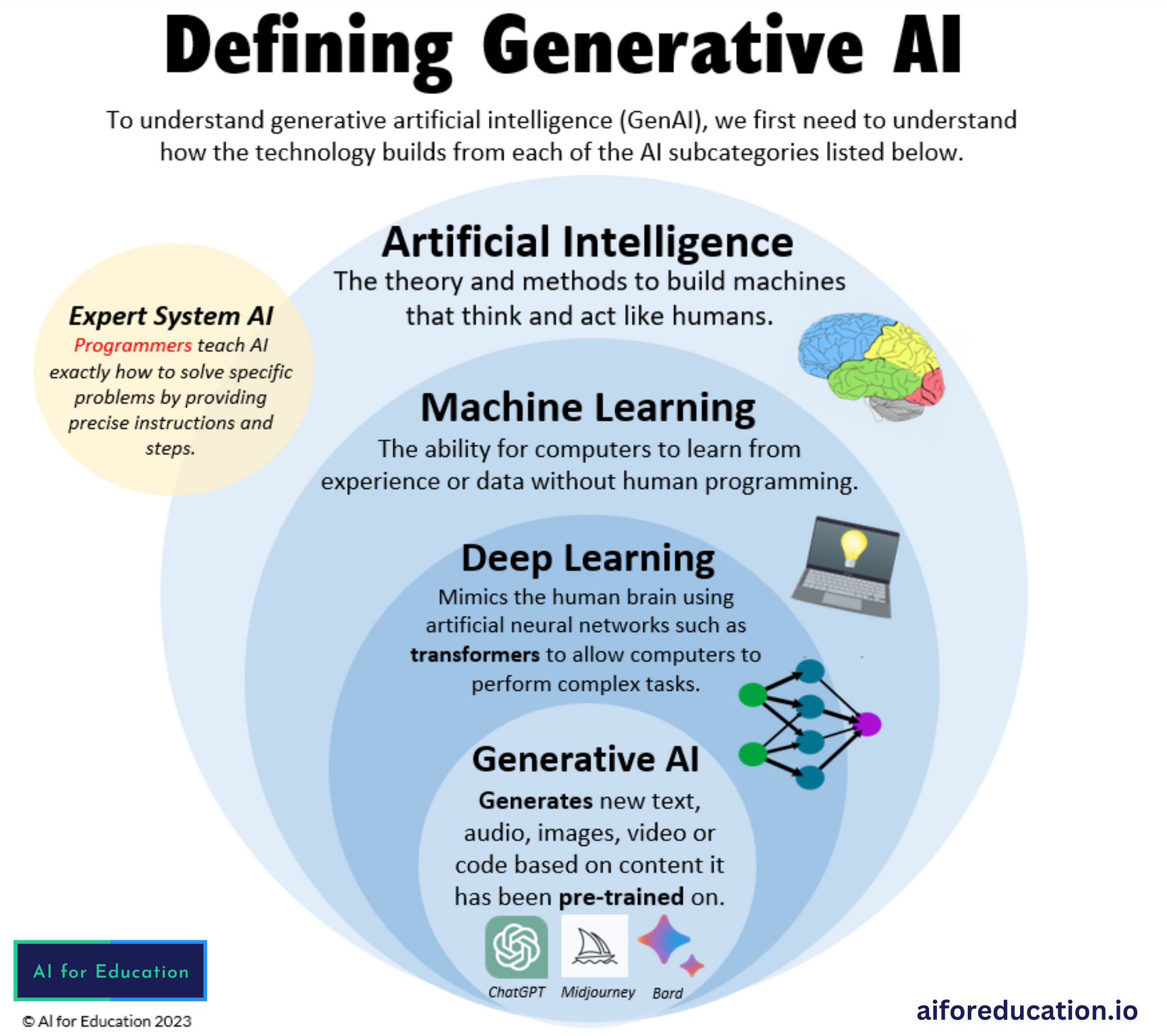

For instance, predictive machine-learning models are trained, using millions of examples, to predict whether a certain X-ray shows signs of a tumor or whether a particular borrower is likely to default on a loan. Generative AI can be thought of as a machine-learning model that is trained to create new data, rather than to make a prediction about a specific dataset.

"When it comes to the actual machinery underlying generative AI and other types of AI, the distinctions can be a little bit blurry. Oftentimes, the same algorithms can be used for both," says Phillip Isola, an associate professor of electrical engineering and computer science at MIT, and a member of the Computer Science and Artificial Intelligence Laboratory (CSAIL).

One big difference, however, is that ChatGPT is far larger and more complex than earlier text-generation models, with billions of parameters. It has also been trained on an enormous amount of data, in this case much of the publicly available text on the internet. In this huge corpus of text, words and sentences appear in sequences with certain dependencies.
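ChatGPT's models are vastly more complex, but the basic idea of learning which words tend to follow which can be illustrated with a toy bigram counter (a first-order Markov chain over words). This is a minimal sketch, not how ChatGPT works internally; the function names `train_bigram` and `predict_next` and the tiny corpus are hypothetical, chosen only for illustration.

```python
from __future__ import annotations

from collections import Counter, defaultdict
from typing import Optional


def train_bigram(corpus: str) -> dict[str, Counter]:
    """Count how often each word is immediately followed by each other word."""
    words = corpus.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(model: dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = model.get(word.lower())  # .get() does not create new entries
    if not counts:
        return None
    return counts.most_common(1)[0][0]


corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigram(corpus)
print(predict_next(model, "the"))  # → cat
print(predict_next(model, "dog"))  # → None
```

A large language model replaces these raw counts with a neural network that conditions on a long stretch of preceding text rather than a single word, but the output is still a guess about what comes next.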

The model learns the patterns of these blocks of text and uses this knowledge to propose what might come next. While larger datasets are one catalyst that led to the generative AI boom, a variety of major research advances also led to more complex deep-learning architectures. In 2014, a machine-learning architecture known as a generative adversarial network (GAN) was proposed by researchers at the University of Montreal.

A GAN uses two models that work in tandem: a generator that learns to produce a target output, such as an image, and a discriminator that learns to tell real data apart from the generator's output. The generator tries to fool the discriminator, and in the process learns to make more realistic outputs. The image generator StyleGAN is based on these types of models. Diffusion models were introduced a year later by researchers at Stanford University and the University of California at Berkeley. By iteratively refining their output, these models learn to generate new data samples that resemble samples in a training dataset, and they have been used to create realistic-looking images.
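The iterative refinement in diffusion models is learned by first corrupting training data with noise, step by step, and then training a network to undo each step. The sketch below shows only the forward (noising) half, using the closed-form formula and linear noise schedule from the later DDPM formulation of diffusion models; the schedule values, the helper names and the toy sample are illustrative assumptions, and the learned denoising network is omitted entirely.

```python
from __future__ import annotations

import math
import random


def linear_beta_schedule(num_steps: int, beta_start: float = 1e-4,
                         beta_end: float = 0.02) -> list[float]:
    """Per-step noise variances that grow linearly from beta_start to beta_end."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas: list[float]) -> list[float]:
    """alpha_bar[t] = product of (1 - beta[s]) for s = 0..t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def noisy_sample(x0: list[float], t: int, alpha_bars: list[float],
                 rng: random.Random) -> list[float]:
    """Jump directly to step t: x_t = sqrt(a_bar) * x0 + sqrt(1 - a_bar) * noise."""
    a_bar = alpha_bars[t]
    return [math.sqrt(a_bar) * x + math.sqrt(1.0 - a_bar) * rng.gauss(0.0, 1.0)
            for x in x0]


betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)
rng = random.Random(0)
x0 = [0.5, -0.2, 0.9]                          # a toy three-value "data sample"
early = noisy_sample(x0, 10, alpha_bars, rng)   # still close to x0
late = noisy_sample(x0, 999, alpha_bars, rng)   # almost pure Gaussian noise
```

Generation runs the other way: starting from pure noise, a trained network estimates and removes a little noise at each step, which is the iterative refinement described above.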

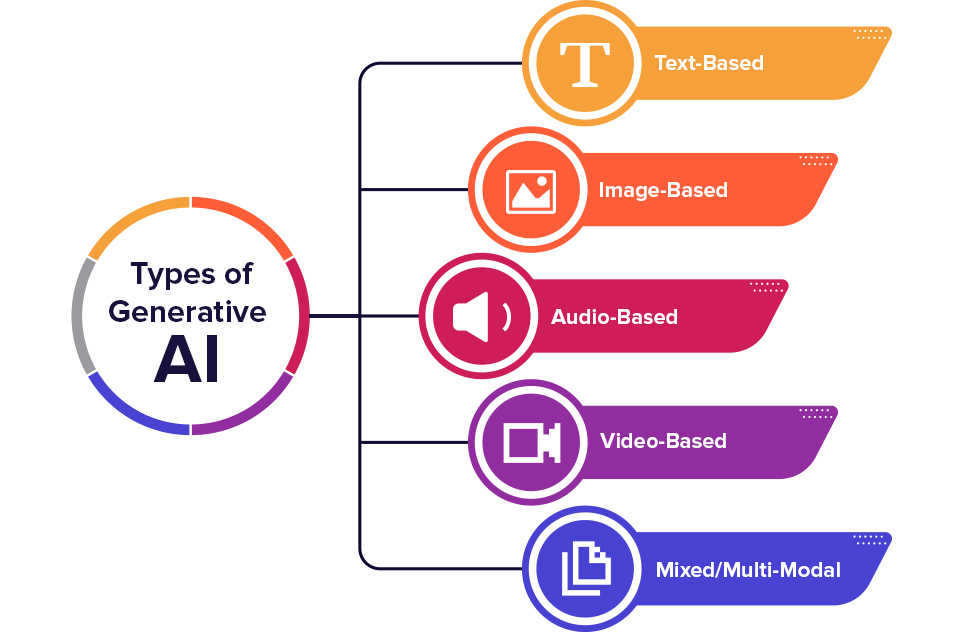

These are just a few of the many approaches that can be used for generative AI. What all of them have in common is that they convert inputs into a set of tokens, which are numerical representations of chunks of data. As long as your data can be converted into this standard token format, then in theory you could apply these methods to generate new data that look similar.
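Real systems use subword tokenizers (such as byte-pair encoding) for text and apply the same idea to image patches or audio. The word-level scheme below is a deliberately simplified, hypothetical illustration of turning data into integer tokens and back; `build_vocab`, `encode` and `decode` are names chosen for this sketch, not a library API.

```python
def build_vocab(texts):
    """Assign each distinct word an integer ID, in order of first appearance."""
    vocab = {}
    for text in texts:
        for word in text.lower().split():
            if word not in vocab:
                vocab[word] = len(vocab)
    return vocab


def encode(text, vocab):
    """Turn text into a list of token IDs (unknown words map to -1)."""
    return [vocab.get(word, -1) for word in text.lower().split()]


def decode(ids, vocab):
    """Map token IDs back to words, using <unk> for IDs not in the vocabulary."""
    inverse = {i: w for w, i in vocab.items()}
    return " ".join(inverse.get(i, "<unk>") for i in ids)


vocab = build_vocab(["the cat sat", "the dog sat"])
ids = encode("the dog sat", vocab)
print(ids)                 # → [0, 3, 2]
print(decode(ids, vocab))  # → the dog sat
```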

While generative models can achieve incredible results, they aren't the best choice for all types of data. For tasks that involve making predictions on structured data, like the tabular data in a spreadsheet, generative AI models tend to be outperformed by traditional machine-learning methods, says Devavrat Shah, the Andrew and Erna Viterbi Professor in Electrical Engineering and Computer Science at MIT and a member of IDSS and of the Laboratory for Information and Decision Systems.

"In the past, humans had to talk to machines in the language of machines to make things happen. Now, this interface has figured out how to talk to both humans and machines," says Shah. Generative AI chatbots are now being used in call centers to field questions from human customers, but this application underscores one potential red flag of implementing these models: worker displacement.

One promising future direction Isola sees for generative AI is its use for fabrication. Instead of having a model make an image of a chair, perhaps it could generate a plan for a chair that could be produced. He also sees future uses for generative AI systems in developing more generally intelligent AI agents.

"We have the ability to think and dream in our heads, to come up with interesting ideas or plans, and I think generative AI is one of the tools that will empower agents to do that, as well," Isola says.

Two more recent advances, discussed in more detail below, have played a critical part in generative AI going mainstream: transformers and the breakthrough language models they enabled. Transformers, a type of neural network architecture, made it possible for researchers to train ever-larger models without having to label all of the data in advance.
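The core operation inside a transformer is attention, which lets each token weigh every other token in a sequence when building its representation; training without hand labels works because the targets (for example, the next token) come from the text itself. The sketch below implements single-head scaled dot-product attention, softmax(QK^T / sqrt(d)) V, in plain Python. Real transformers add learned projection matrices, multiple heads and many stacked layers, all omitted here, and the toy vectors are made up for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.

    queries and keys are lists of length-d vectors; values holds one vector per key.
    Returns one output vector per query, plus the attention weights used.
    """
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Output is the weight-averaged combination of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Three toy token vectors; here the same vectors act as queries, keys and values.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, weights = attention(tokens, tokens, tokens)
```

Because every query is compared with every key in one pass, the computation parallelizes well on GPUs, which is part of why transformers scaled to very large models.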

Advances in multimodal AI, which works across text, images and other media, are the basis for tools like Dall-E that automatically create images from a text description or generate text captions from images. These breakthroughs notwithstanding, we are still in the early days of using generative AI to create readable text and photorealistic stylized graphics. Early implementations have had issues with accuracy and bias, and have been prone to hallucinations and to giving strange answers.

Going forward, this technology could help write code, design new drugs, develop products, redesign business processes and transform supply chains. Generative AI starts with a prompt, which could be a text, an image, a video, a design, musical notes or any other input that the AI system can process.

Researchers have been creating AI and other tools for programmatically generating content since the early days of AI. The earliest approaches, known as rule-based systems and later as "expert systems," used explicitly crafted rules for generating responses or data sets. Neural networks, which form the basis of many of today's AI and machine learning applications, flipped the problem around: rather than following hand-written rules, they learn the rules by finding patterns in existing data sets.
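The contrast between hand-written and learned rules can be shown on a trivial task, logical AND. The first function below encodes the rule directly, as an expert system would; the second uses the classic perceptron learning rule (a single artificial neuron) to recover the same behavior from four labeled examples. This is a toy illustration with hypothetical function names, not a model of modern neural networks, which stack many units and train them with gradient descent.

```python
def rule_based_and(a: int, b: int) -> int:
    """Expert-system style: a human writes the rule explicitly."""
    return 1 if a == 1 and b == 1 else 0


def train_perceptron(examples, epochs: int = 10, lr: int = 1):
    """Learn weights for one artificial neuron from labeled examples."""
    w1 = w2 = bias = 0
    for _ in range(epochs):
        for (a, b), target in examples:
            prediction = 1 if w1 * a + w2 * b + bias > 0 else 0
            error = target - prediction  # -1, 0 or +1
            w1 += lr * error * a         # nudge weights toward the target
            w2 += lr * error * b
            bias += lr * error
    return w1, w2, bias


def perceptron_predict(weights, a: int, b: int) -> int:
    """Fire (return 1) when the weighted sum of inputs exceeds zero."""
    w1, w2, bias = weights
    return 1 if w1 * a + w2 * b + bias > 0 else 0


# Only input/output examples are given; the AND rule itself is never written down.
examples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights = train_perceptron(examples)
for (a, b), _ in examples:
    print(a, b, rule_based_and(a, b), perceptron_predict(weights, a, b))
```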

Developed in the 1950s and 1960s, the first neural networks were limited by a lack of computational power and small data sets. It was not until the advent of big data in the mid-2000s and improvements in computer hardware that neural networks became practical for generating content. The field accelerated when researchers found a way to get neural networks to run in parallel across the graphics processing units (GPUs) that were being used in the computer gaming industry to render video games.

ChatGPT, Dall-E and Gemini (formerly Bard) are popular generative AI interfaces.

Dall-E. Trained on a large data set of images and their associated text descriptions, Dall-E is an example of a multimodal AI application that identifies connections across multiple media, such as vision, text and audio. In this case, it connects the meaning of words to visual elements.

It enables users to generate imagery in multiple styles driven by user prompts.

ChatGPT. The AI-powered chatbot that took the world by storm in November 2022 was built on OpenAI's GPT-3.5 implementation.
